Why we created this database

At present, SAI research is fragmented and underpowered. Different groups are chasing their own—often interesting—questions, but there's no shared view of:

- which uncertainties actually matter for real-world decisions, and

- what it would take to reduce them.

This leaves funders guessing where to place resources, researchers second-guessing what's relevant, and policymakers skeptical that the SAI field can ever deliver actionable guidance.

Our hypothesis is that we can improve on this if we:

- Make key technical uncertainties visible and comparable so that the field can converge more quickly on which questions really matter for decisions about SAI.

- Separate "how uncertain we are" from "how much it matters for decisions" so that we can prioritize work that might actually change decision-making in addition to filling knowledge gaps.

- Link each uncertainty to the point at which it could realistically be reduced or resolved along a path from models to experiments to deployment, so that we can design research programs and funding portfolios that are both ambitious and realistic.

This SAI Uncertainty Database is our first attempt to do that: a dynamic, public, scientifically grounded assessment of the key technical uncertainties in SAI. We hope the database helps:

- Researchers select projects that answer the most decision-relevant questions.

- Funders build portfolios that systematically reduce high-leverage uncertainties.

- Policymakers see where the science is strong, where it's limited, and what it would take to improve it.

- The wider community form an initial foundation for a transparent, prioritized, stage-gated SAI research roadmap.

How we built it

For a more detailed and technical description of our process, please see our methodology.

Drawing on team expertise, literature review, and early conversations with external experts, the Reflective team followed these main steps to build out the database:

- Define the space of technical uncertainties: We identified the main technical uncertainties that must be understood for informed decisions about SAI and split them up into four categories:

  - Aerosol Evolution

  - Climate Response

  - Earth System Response

  - Engineering

- Describe and score each uncertainty: We populated the following fields for each entry:

  - Metric: A specific, measurable "what-if" scenario, such as a specific amount of ozone loss or change in AMOC strength. The metric must be quantitative so that we can estimate how likely the scenario is and why it matters for decisions.

  - Level of Uncertainty: How likely is it that our current best estimate of the quantity representing this uncertainty is wrong by the amount specified in the metric? This is listed on a scale of low to high.

  - Decision Relevance: How much would decision-making on SAI be impacted if we are wrong about the uncertainty? This is listed on a scale of low to high.

  - Resolvability scale: At what point in the following pathway could this uncertainty plausibly be materially reduced?

    - In silico (modeling and lab work)

    - Small-scale experiments

    - Larger-scale field trials

    - Discernible surface climate impact

    - Long-term sustained deployment
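As an illustration only, the fields described above could be captured in a small record type. The sketch below is not the database's actual schema. The class and field names (`UncertaintyEntry`, `Level`, `Stage`), the three-step low/medium/high scale, and the placeholder entry are all assumptions made for this example; the text only says that scores run "low to high".

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class Category(Enum):
    # The four categories named in the text.
    AEROSOL_EVOLUTION = "Aerosol Evolution"
    CLIMATE_RESPONSE = "Climate Response"
    EARTH_SYSTEM_RESPONSE = "Earth System Response"
    ENGINEERING = "Engineering"


class Level(IntEnum):
    # Assumption: three ordered steps; the source only says "low to high".
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Stage(IntEnum):
    # Resolvability pathway, ordered from earliest to latest stage.
    IN_SILICO = 1
    SMALL_SCALE_EXPERIMENTS = 2
    LARGER_SCALE_FIELD_TRIALS = 3
    DISCERNIBLE_SURFACE_CLIMATE_IMPACT = 4
    LONG_TERM_SUSTAINED_DEPLOYMENT = 5


@dataclass(frozen=True)
class UncertaintyEntry:
    # Hypothetical field names mirroring the fields listed above.
    name: str
    category: Category
    metric: str
    level_of_uncertainty: Level
    decision_relevance: Level
    resolvability: Stage


# Placeholder values for illustration; not taken from the database.
example = UncertaintyEntry(
    name="Placeholder entry",
    category=Category.CLIMATE_RESPONSE,
    metric="Placeholder: quantity X differs from the best estimate by more than Y",
    level_of_uncertainty=Level.MEDIUM,
    decision_relevance=Level.HIGH,
    resolvability=Stage.SMALL_SCALE_EXPERIMENTS,
)
```

Keeping Level of Uncertainty and Decision Relevance as two separate fields mirrors the separation of "how uncertain we are" from "how much it matters for decisions", and the ordered `Stage` values make it possible to ask whether an entry could be reduced before a given point on the pathway.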

Scope and limitations

To keep this effort honest and tractable, we made a few conscious choices:

- Solely technical scope. This database focuses on technical uncertainties only. We do not attempt to capture societal, governance, or geopolitical uncertainties here, even though we view them as indispensable parts of any eventual decision-making framework for SAI.

- Scenario bounded. To bound the scope, we adopt specific scenario assumptions (e.g., about injection strategy and timescales). The scenario itself is a major open question, and we expect to revisit and expand these assumptions over time.

- No normative conclusions. The database does not answer whether SAI is a good or bad idea. It clarifies what we would need to know to enable robust, informed decision-making on SAI.

We expect both the entries and these scoping choices to evolve as the field and community input grow.

How to use the database

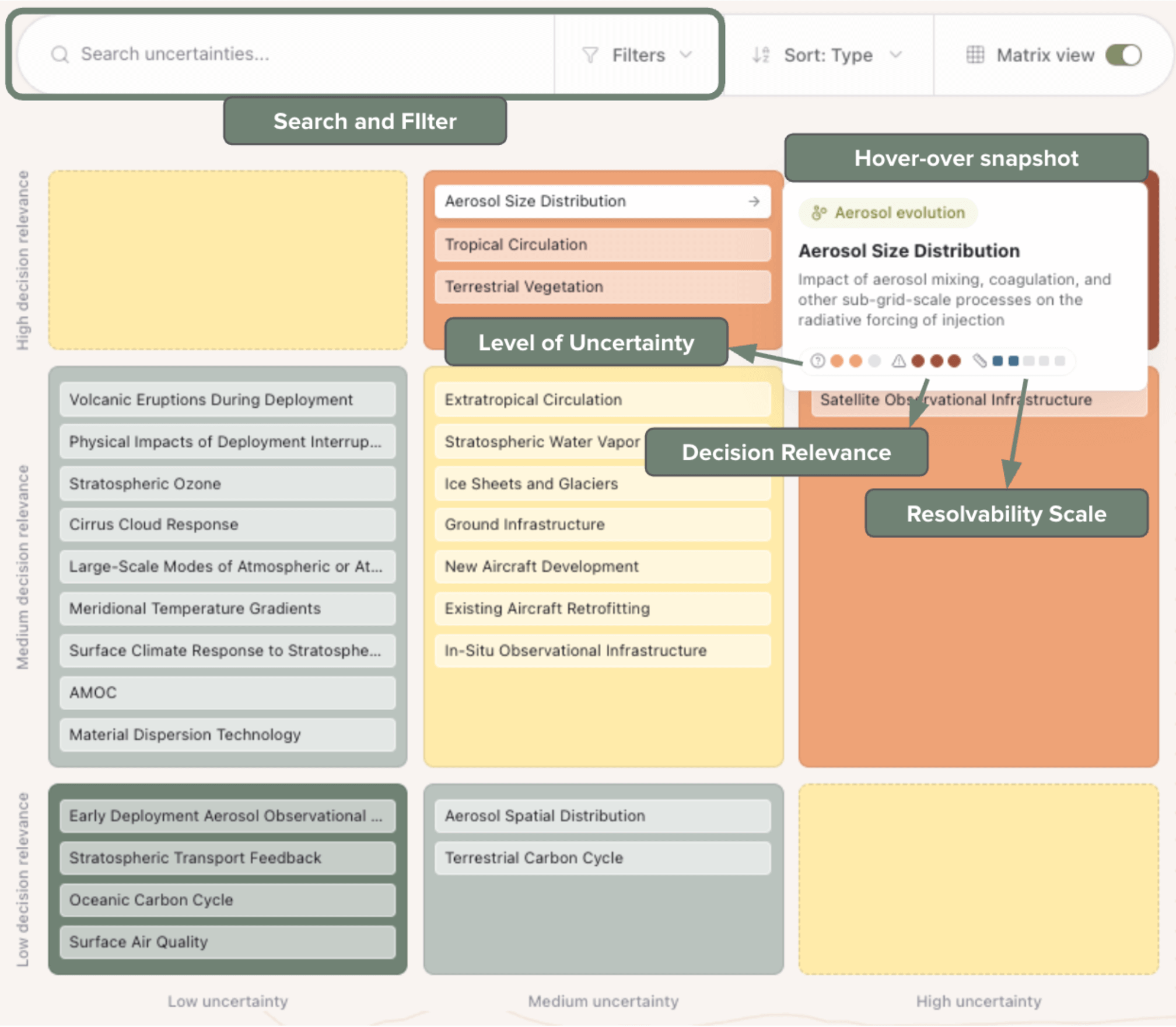

On the landing page, you'll see a matrix of uncertainties across the four categories. You can:

- Scan the matrix for an overview. Hover over any uncertainty to see a short description and key quantitative details, including our assessment of its uncertainty level, decision relevance, and resolvability scale.

- Filter to what you care about. Use the search and filter tools to narrow by:

  - Category (e.g., Climate Response)

  - Level of Uncertainty

  - Decision Relevance

  - Resolvability scale

  This can help you quickly find, for example, "high decision-relevance uncertainties that could be resolved before any large-scale deployment."

- Click into individual uncertainties. Each entry includes a more detailed description of:

  - What the uncertainty encompasses

  - Why we placed it where we did on the matrix

  These pages are intended to help researchers, funders, and policymakers identify concrete directions for work.

- Give feedback and improve the database. At the top right of each uncertainty page—and at the bottom of every page—you'll find a link to suggest edits or additions. Useful feedback might include:

  - Proposing changes to the uncertainty level, decision relevance, or resolvability scale

  - Suggesting more intuitive or decision-aligned metrics

  - Recommending uncertainties to add, remove, or merge

  - Highlighting process improvements or missing dimensions we should track

  - Flagging elements that should feed into a future SAI research roadmap
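For readers working with an exported copy of the entries rather than the website, the filter example given above ("high decision-relevance uncertainties that could be resolved before any large-scale deployment") might look like the sketch below. Everything here is hypothetical: the `Entry` type, the three-step `Level` scale, the placeholder rows, and the choice to read "before any large-scale deployment" as "earlier than the discernible surface climate impact stage". The cutoff is left as a parameter because that reading is a judgment call.

```python
from enum import IntEnum
from typing import NamedTuple


class Level(IntEnum):
    # Assumption: three ordered steps on the "low to high" scale.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class Stage(IntEnum):
    # Resolvability pathway, ordered from earliest to latest stage.
    IN_SILICO = 1
    SMALL_SCALE_EXPERIMENTS = 2
    LARGER_SCALE_FIELD_TRIALS = 3
    DISCERNIBLE_SURFACE_CLIMATE_IMPACT = 4
    LONG_TERM_SUSTAINED_DEPLOYMENT = 5


class Entry(NamedTuple):
    # Hypothetical minimal record: only the fields this filter needs.
    name: str
    decision_relevance: Level
    resolvability: Stage


def high_relevance_resolvable_before(entries, cutoff):
    """Keep entries with high decision relevance whose stage precedes `cutoff`."""
    return [
        e for e in entries
        if e.decision_relevance == Level.HIGH and e.resolvability < cutoff
    ]


# Placeholder rows for illustration; not real database content.
entries = [
    Entry("placeholder A", Level.HIGH, Stage.IN_SILICO),
    Entry("placeholder B", Level.HIGH, Stage.LONG_TERM_SUSTAINED_DEPLOYMENT),
    Entry("placeholder C", Level.LOW, Stage.SMALL_SCALE_EXPERIMENTS),
]

selected = high_relevance_resolvable_before(
    entries, cutoff=Stage.DISCERNIBLE_SURFACE_CLIMATE_IMPACT
)
print([e.name for e in selected])  # prints ['placeholder A']
```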

A community resource

Reflective maintains the database, but we do not view it as "ours" alone. Version 1.0 has already been shaped by external reviewers and by Reflective's Scientific Advisory Board. Their input changed what is included, how it is grouped, and how we score and describe many uncertainties.

We expect and invite feedback on topics including those listed above. To share it, use the feedback link at the top right of each uncertainty page or at the bottom right of every page.

Our aim is for this to become a shared resource for the SAI research and policy community: transparent enough to critique, structured enough to build on, and flexible enough to improve over time. Please see the "Management Policies" section of the Methodology to learn more about our process for keeping the database up to date.

Thank you

We're deeply grateful to the researchers and practitioners who generously shared their time and expertise to inform this first release. We'd also like to thank Jake Dexheimer for his excellent work building out the database website, as well as Frontier Climate for providing inspiration through their carbon removal knowledge gaps database. Have questions or want to learn more? Email us at roadmap@reflective.org.